Pharmacists and healthcare professionals operate in an increasingly complex medicines-information environment. Large Language Models (LLMs) and AI offer powerful tools to reduce information burden — but only when grounded in authoritative, regulated, and continuously updated data. This article explores how responsible AI, built on myHealthbox medicines information, can safely support clinical decision-making without replacing professional judgement.

The medicines information challenge

Every day, pharmacists, doctors, and other healthcare professionals (HCPs) make critical decisions under significant time pressure, balancing patient safety, regulatory compliance, and operational efficiency.

Key challenges include:

- Information overload — over 2 million authorised medicinal products worldwide

- Fragmented sources — PILs, SmPCs, safety alerts, recalls, and shortages across multiple systems

- Language barriers — prescriptions and packaging in unfamiliar languages

- Regulatory change — frequent updates to safety warnings and product status

- Supply shortages — requiring rapid therapeutic substitution

As a result, HCPs may spend 30–40% of their time searching for and verifying medicines information.

How LLMs and AI work

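Large Language Models are trained on vast volumes of text to recognise patterns in human language. Rather than retrieving predefined answers, they generate responses one token (a word or word fragment) at a time, choosing each token from a probability distribution conditioned on the preceding context.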

In healthcare, this means:

- AI does not inherently understand clinical accuracy

- Outputs depend on data quality and governance

- Responses may appear authoritative while being incomplete or outdated

For medicines information, strong constraints, domain-specific grounding, and regulatory oversight are essential.

AI in healthcare: a decision-support role

AI systems in healthcare broadly fall into three categories:

- Generative systems — drafting text or summaries

- Diagnostic and decision-support systems — assisting professional judgement

- Operational efficiency systems — optimising workflows and resources

PharmaCopilot by myHealthbox is a decision-support system.

It supports pharmacists with information retrieval and interpretation — it does not make clinical decisions.

Common risks of AI in healthcare

False or misleading outputs

Generative AI can produce plausible but incorrect responses. Verification remains essential.

Data bias

AI may reflect gaps or bias in training data, potentially skewing outputs.

Patient confidentiality

Decision-support systems often require sensitive patient information, making data protection and GDPR compliance critical.
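Pseudonymisation is one common way to limit how much patient data leaves the pharmacy system. Direct identifiers are stripped, and the patient ID is replaced with a keyed hash. The sketch below is a minimal illustration under stated assumptions: the field names and the `DIRECT_IDENTIFIERS` set are hypothetical, and key management is out of scope. Pseudonymised data generally remains personal data under GDPR, so this technique reduces exposure but does not remove compliance obligations.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers to strip before a query leaves
# the pharmacy system; a real deployment would define this per data model.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email"}


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Return a copy of `record` with direct identifiers removed and the
    patient ID replaced by a keyed hash (HMAC-SHA256)."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "patient_id" in cleaned:
        digest = hmac.new(secret_key,
                          str(cleaned["patient_id"]).encode("utf-8"),
                          hashlib.sha256)
        cleaned["patient_id"] = digest.hexdigest()
    return cleaned


record = {"patient_id": "12345", "name": "Jane Doe", "age": 70,
          "medications": ["product_a", "product_b"]}
print(pseudonymise(record, secret_key=b"keep-this-key-local"))
```

The same key always yields the same pseudonym, so queries about one patient can still be linked locally without the raw ID ever being sent.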

Why official and accurate data matters

Medicines information is highly regulated. Patient safety depends on accuracy, consistency, and regulatory alignment.

AI systems in this domain must rely on:

- Official, regulator-approved product information

- Up-to-date PILs and SmPCs

- Clear version control and traceability

- Transparent source provenance

Using unofficial or unverified sources introduces unacceptable clinical and regulatory risk.
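Version control and provenance can be modelled as metadata attached to every document. The sketch below uses a hypothetical `ProductDocument` record; it is not a myHealthbox schema. It selects the revision that was current on a given date. The same lookup also lets an auditor reconstruct which information was in force when a particular answer was given.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ProductDocument:
    product_id: str   # hypothetical internal product identifier
    doc_type: str     # e.g. "SmPC" or "PIL"
    version: int
    approved_on: date # date the regulator approved this revision
    source_url: str   # where the official document was obtained
    authority: str    # issuing regulator, e.g. "EMA"


def current_document(docs, product_id, doc_type, as_of):
    """Return the latest revision approved on or before `as_of`, or None."""
    candidates = [
        d for d in docs
        if d.product_id == product_id
        and d.doc_type == doc_type
        and d.approved_on <= as_of
    ]
    return max(candidates, key=lambda d: (d.approved_on, d.version), default=None)


docs = [
    ProductDocument("P1", "SmPC", 1, date(2022, 1, 10), "https://example.org/p1/smpc/v1", "EMA"),
    ProductDocument("P1", "SmPC", 2, date(2024, 3, 5), "https://example.org/p1/smpc/v2", "EMA"),
]
print(current_document(docs, "P1", "SmPC", as_of=date(2025, 1, 1)))
```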

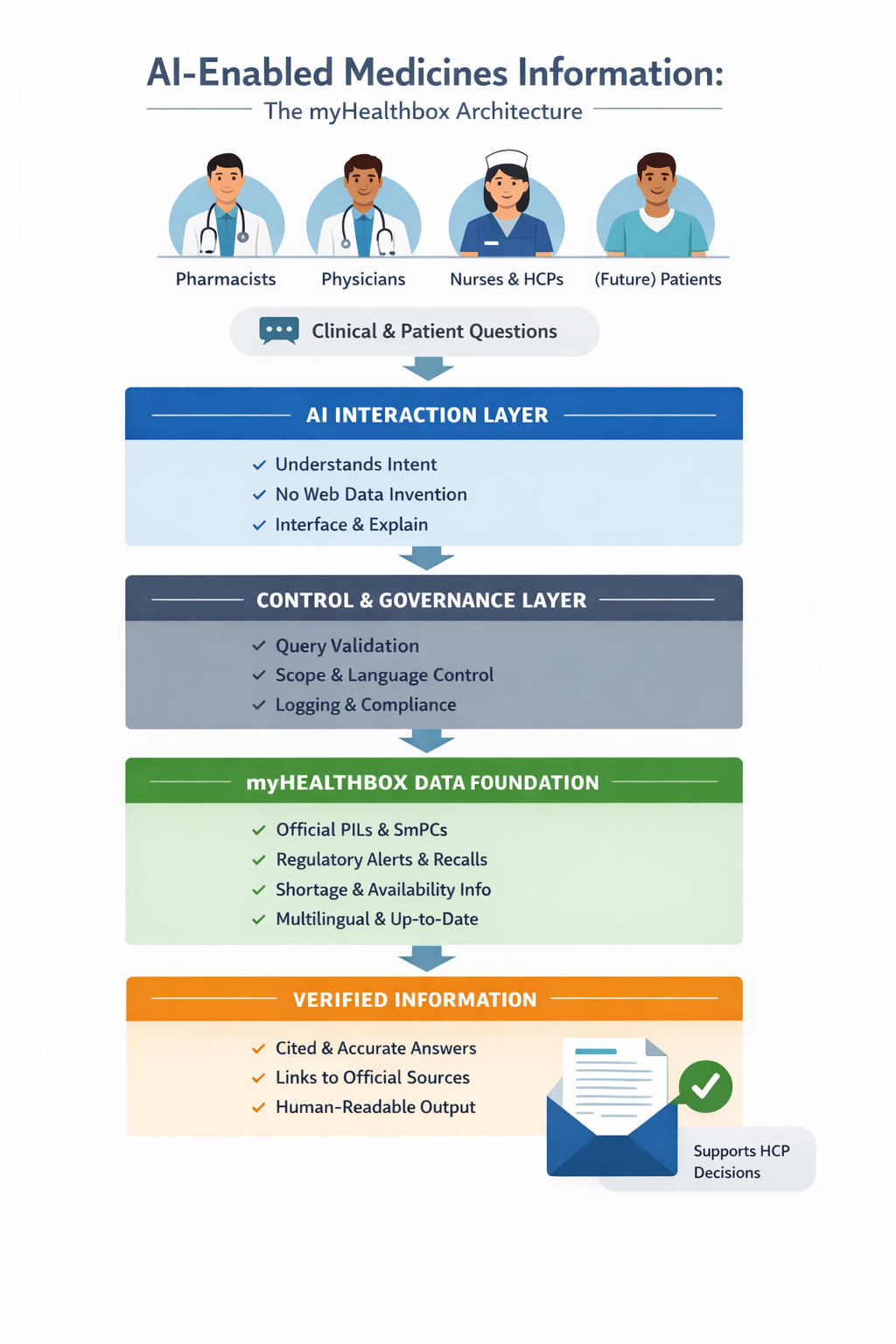

How an AI-based pharmacist service works with myHealthbox

myHealthbox maintains an extensive, curated repository of official medicines information.

PharmaCopilot uses AI as an access and interpretation layer, not as an independent decision-maker:

- Information is retrieved exclusively from validated myHealthbox sources

- Responses are grounded in official documentation

- Outputs are traceable to authoritative documents

- Regulatory updates are reflected in near real time

This reduces information burden without compromising safety or compliance.

AI does not make medicines information safer.

Authoritative data, governance, and transparency do.
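The grounding approach described in this section is commonly called retrieval-augmented generation (RAG). The sketch below is a deliberately simplified, hypothetical illustration and not PharmaCopilot's implementation. It ranks passages from a validated corpus by naive keyword overlap, whereas production systems typically use vector or hybrid search. It answers only from the passages it finds, attaches a citation to each, and declines to answer when no passage clears the `min_overlap` threshold.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    doc_id: str   # hypothetical identifier of an official source document
    section: str  # e.g. "4.5" for the SmPC interactions section
    text: str


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a stand-in for a real text encoder."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, min_overlap=3, top_k=3):
    """Rank validated passages by shared keywords and drop weak matches."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p.text)), p) for p in corpus]
    hits = [(s, p) for s, p in scored if s >= min_overlap]
    hits.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in hits[:top_k]]


def grounded_answer(query, corpus):
    """Answer only from retrieved passages, each cited; otherwise decline."""
    passages = retrieve(query, corpus)
    if not passages:
        return {"answer": None, "citations": [], "status": "no_authoritative_source"}
    # A real system would have an LLM summarise these passages;
    # this sketch simply quotes them.
    answer = " ".join(p.text for p in passages)
    citations = [f"{p.doc_id}, section {p.section}" for p in passages]
    return {"answer": answer, "citations": citations, "status": "grounded"}
```

The refusal path is the key design choice here: when no authoritative passage matches, the system returns no answer rather than letting a model improvise one.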

Key advantages of a myHealthbox-based solution

- Regulatory reliability — official, approved sources only

- Single point of access — medicines data, alerts, recalls, shortages

- Multilingual support — consistent information across languages

- Reduced hallucination risk — constrained, validated outputs

- Auditability — traceable for professional and regulatory review

These characteristics align with emerging EU requirements for high-risk AI systems in healthcare, such as the record-keeping, transparency and human-oversight obligations of the AI Act.

Practical use cases in pharmacy

Complex medication review

Pharmacists can ask natural-language questions to identify interactions, contraindications, and counselling points, with every answer linked to official sources.
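At its core, an interaction check over a medication list tests every unordered pair of products against a reference table. The sketch below uses a hypothetical, hard-coded `INTERACTIONS` table with placeholder product names. In practice, the entries and their citations would come from the interaction sections of the official SmPCs.

```python
from itertools import combinations

# Hypothetical interaction table: each unordered pair maps to
# (recommended action, source). Placeholder data only.
INTERACTIONS = {
    frozenset({"product_a", "product_b"}): ("avoid combination", "SmPC product_a, section 4.5"),
    frozenset({"product_b", "product_c"}): ("monitor", "SmPC product_c, section 4.5"),
}


def review_medications(medications):
    """Check every unordered pair in a medication list against the table."""
    findings = []
    for first, second in combinations(sorted(set(medications)), 2):
        entry = INTERACTIONS.get(frozenset({first, second}))
        if entry:
            action, source = entry
            findings.append({"pair": (first, second), "action": action, "source": source})
    return findings


for finding in review_medications(["product_c", "product_a", "product_b"]):
    print(finding)
```

A list of n products yields n(n-1)/2 pairs, which is trivial for typical medication reviews.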

Shortage management

Therapeutic alternatives are surfaced with authorised indications and dosing guidance.
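Finding alternatives can be framed as a filter over the product catalogue. In the hypothetical sketch below, the `Product` fields and `supply_status` values are assumptions. A candidate must be available and authorised for the same indication, and candidates that share the active substance of the product in shortage are listed first. The final choice, including any dose conversion, remains a professional decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    active_substance: str
    authorised_indications: frozenset[str]
    supply_status: str  # hypothetical values: "available", "shortage", "recalled"


def alternatives(short_product, indication, catalogue):
    """List available products authorised for the same indication,
    with same-active-substance options first, then alphabetical."""
    options = [
        p for p in catalogue
        if p.name != short_product.name
        and p.supply_status == "available"
        and indication in p.authorised_indications
    ]
    return sorted(
        options,
        key=lambda p: (p.active_substance != short_product.active_substance, p.name),
    )


short = Product("Brand1", "substance_s", frozenset({"ind_1"}), "shortage")
catalogue = [
    short,
    Product("Generic1", "substance_s", frozenset({"ind_1"}), "available"),
    Product("Other1", "substance_t", frozenset({"ind_1"}), "available"),
]
print([p.name for p in alternatives(short, "ind_1", catalogue)])
```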

Multilingual patient communication

Patient-friendly explanations in the patient's preferred language, alongside professional summaries.

Regulatory awareness

Concise updates on recalls, safety alerts, and labelling changes.

Verifying AI responses

Effective safeguards include:

- Explicit source citations

- Direct links to official documentation

- Clear labelling of AI-generated content

- Logging and audit trails

- Escalation paths to human experts
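Several of these safeguards can be enforced at a single point before a response reaches the user. The sketch below assumes a hypothetical response shape: an `answer` plus a list of `citations`, each with a `url`. The logger name and label text are also illustrative. The function attaches an AI-content label, writes a structured audit-log entry, and flags the response for human escalation whenever a citation or its link is missing.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("ai_audit")  # hypothetical audit logger name

AI_LABEL = "AI-generated summary. Verify against the linked official documents."


def review_gate(response: dict, user_id: str) -> dict:
    """Label the response, log it for audit, and escalate if it lacks sources."""
    citations = response.get("citations", [])
    # Escalate when there are no citations or any citation lacks a link.
    needs_escalation = not citations or any(not c.get("url") for c in citations)
    result = {
        "label": AI_LABEL,
        "answer": response.get("answer"),
        "citations": citations,
        "escalate_to_human": needs_escalation,
    }
    # Structured, timestamped entry; user_id should itself be pseudonymous.
    logger.info(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "cited_sources": [c.get("url") for c in citations],
        "escalated": needs_escalation,
    }))
    return result
```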

Requirements for use

For patients

- Clear statements that AI does not replace medical advice

- Plain-language explanations

- Easy access to official sources

- Transparent data-handling practices

For healthcare professionals

- Clear definition of intended use

- Confidence in data provenance

- Workflow integration

- Access to original documents

References and legislation

Artificial Intelligence

- Regulation (EU) 2024/1689 — Artificial Intelligence Act

- AI Act Explorer

Health data governance

- Regulation (EU) 2025/327 — European Health Data Space

- European Commission EHDS overview

Privacy and data protection

- General Data Protection Regulation (GDPR), Regulation (EU) 2016/679

Medicines regulation

- European Medicines Agency — Artificial Intelligence in Medicines Regulation

Key Takeaways

- AI and Large Language Models can significantly reduce the medicines information burden faced daily by healthcare professionals.

- In healthcare, AI must be grounded in official, approved and up-to-date data to be safe, trustworthy and compliant.

- Generic AI models are not suitable for medicines information without strong data governance and regulatory controls.

- myHealthbox provides a uniquely robust foundation for AI-enabled medicines information through its curated, authoritative data.

- When properly designed, AI can support pharmacists, prescribers, nurses and other HCPs, and eventually patients, without replacing professional judgement.

- Responsible AI in healthcare depends on transparency, traceability, human oversight and alignment with European regulation.